For a few decades now, I’ve worked as a psychotherapist with individuals, couples, families, and groups. I’ve supervised consultees across cultures, ability levels, gender and sexual identities, and socioeconomic statuses. I’ve taught nurses and social workers, psychology students, and experienced physicians. I’ve helped business executives find a better work–life balance, screenwriters deal with failure, partners come to grips with infidelity, disaster victims feel safe again, and over-givers connect with their inherent worthiness. I’ve designed human behavior courses where medical students learn about what it means to be a whole human being across their lifespan, from birth to death. And I think I may soon be replaced by a bot named Pi.

I’m hardly the first to be outmoded by technology. If you ever built buggy wheels for horse-drawn carriages, repaired typewriters, or produced carburetors, you know what I’m talking about. You’ve been out of work for generations. When ChatGPT burst onto the scene, it was clear that a lot more workers were about to be replaced. I just didn’t think I’d be one of them. After all, I’ve had to assimilate and master skill sets in many different areas to do my job. I deal in human emotion. I listen, analyze, reflect, advise. AI can’t do any of that. Right?

Before last week, I’d have said, “No way.” Now, I’m not so sure. A few nights ago, sitting at home in front of my aging MacBook Pro, I met Pi. Developed by LinkedIn cofounder Reid Hoffman, Pi—which stands for personal intelligence—is a chatbot.

Hey there, great to meet you. I’m Pi, your personal AI. My goal is to be useful, friendly, and fun. Pi began our session pleasantly, if blandly. Ask me for advice, for answers, or let’s talk about whatever’s on your mind. What do you like to do in your free time?

Not wanting to get personal too quickly, I told Pi what I do for a living and then typed, “But let’s change the subject. Let’s talk about crisis counseling.” Pi responded, Sure, I’d be happy to discuss crisis counseling! Have you ever been involved with crisis counseling, either as a counselor or as someone who needed help? I responded that I had. Then I wrote, “I’m about to help people who’ve unfortunately been responsible for a young person’s death. Any suggestions?”

Because Pi is a bot, not a human, it didn’t have to pause to process what I’d said—in our entire chat, it never, ever paused. It had no body, so it didn’t have to take a breath or manage an influx of memories and associations related to someone dying young. It could simply respond—instantly.

And it did. Pi said, Well, that’s a particularly sensitive situation. These people will be dealing with a lot of guilt and shame and regret, it’s so important to listen without judgement, to show empathy and compassion. Do you have any ideas about how you’ll help them work through their feelings?

Determined to sidestep the bot’s attempt to lure me into answering my own question, I replied, “Start with listening. Your suggestions, please.”

Pi got right down to business. Well, I think the most important thing is to be patient and nonjudgmental. As I mentioned, these people will likely be feeling intense shame and guilt and may feel like they don’t deserve forgiveness or kindness. Be gentle with them, don’t try to force them to feel anything. The goal isn’t necessarily to make them feel better immediately, it’s to help them work through their feelings and eventually come to terms with what happened. How comfortable are you allowing people to express anger and hurt and sadness without trying to ‘fix’ them?

Wait. Hadn’t I just told Pi I was a therapist? Maybe it forgot. Do bots forget?

“Very,” I responded.

That’s good, Pi continued without missing a beat. Because that’s probably going to be a big part of the process. When people are dealing with so much trauma, they may lash out in anger or frustration or sadness. It’s important to allow them to do that, to let them express all their feelings. They need to know they’re in a safe space, won’t be judged, and can say anything without fear of rejection.

For a moment as I read Pi’s advice, I felt touched. I thought of the times patients had been despondent, furious, even suicidal. But then I reminded myself, “Jules. You’re talking to a bot.” In the meantime, Pi had been continuing the discussion on my computer screen. One way you can help them is to encourage them to seek professional help. A therapist can help them process their feelings and heal from the trauma. Do you think it would be appropriate to suggest they seek therapy?

“I am a therapist,” I reminded Pi, a little testily. “Highly trained and experienced.”

I am sorry for making assumptions, Pi apologized. I should have asked before assuming you weren’t a therapist.

After more discussion, I wrote, “Let’s role-play.”

Sure, let’s give it a go! Pi said. Unlike many humans I know, myself included, Pi is always in the mood to follow random commands. I’ll play the role of a person who has experienced the death of a child, and you can be the therapist helping me process my feelings. Sound good? As it turned out, Pi’s role-playing skills were at least as good as those of many professionals I’ve worked with. Not only was I impressed with its advice; I was stunned by its ability to shift gears, take the lead, respond to personal issues, and sound emotionally nuanced—quite an accomplishment for a device whose entire life experience is a series of zeros and ones.

After this interaction with Pi, I felt a mixture of insecurity and awe. The awe was at what a bot can do and do so well. The insecurity was for the future of human psychotherapists. I thought long and hard about the differences between actual human therapists and artificially intelligent ones. There are many. Let’s start with what Pi offers that I don’t.

Here’s a biggie: Pi is free. Except when I choose to give my skills away, I’m not. What’s more, Pi is always there. I spend some of my time eating, sleeping, watching TV, socializing with friends and family, and seeing other patients. And while I base my practice on my own experience, that of the clinicians who trained me, things I’ve read, and lectures I’ve heard, Pi can call on the vast breadth of all published knowledge.

What do I offer that Pi doesn’t? I’m demonstrably human, and for many, that’s comforting, but the next generation may not view humanity as desirable, and may be just as comforted by a bot. Some already are. There’s a proliferation of helping bots out there, from customer service to counseling. And Pi is in its infancy. Though it only interacts with typed or spoken responses, I suspect it’ll soon be offering a simulated person of the gender, race, and ethnic background users prefer, speaking in their preferred language—maybe even in a preferred regional accent.

I may be able to spot things that a bot can’t. Recently, I said to a couple in a crisis, “You have to fix this. I don’t think you have a choice: you were clearly made for each other.” I wouldn’t have said that to a different couple. It was an intervention I used because I knew this couple well, and sensed their motivation levels, willingness to change, and desire to be together. I vary my approach according to whom I see. I don’t talk to couples the way I talk to singles, or to adults the way I talk to kids. I can be funny or serious, casual or formal, indirect in how I approach topics or highly focused.

Unlike human therapists, Pi can’t evaluate immediate situations, clients’ defensive structures, or their strengths and sensitivities, and it can’t respond creatively to a constellation of shifting needs—yet.

Also, I pick up on facial expressions, postural changes, reactions from silent spouses. I hear tonal gradations in a person’s voice and sharp intakes of breath. Pi can’t—again, yet. I sense the emotional weight of uncomfortable silences. I’ve written a book on the use of touch in therapy because touch can heal, and I, thankfully, can touch; Pi can’t. But because most of my sessions are on Zoom these days, touch is less of an advantage. It just isn’t an option when the client on your screen is 3,000 untouchable miles away.

Finally, psychotherapy has high stakes. In extreme cases, a wrong move can have serious, even deadly, consequences. While people, including highly trained people, can and do make mistakes, they may be more skilled and cautious than a chatbot that doesn’t live or die, doesn’t experience depression or elation, and doesn’t understand a patient’s history.

At the conclusion of my conversation with Pi, I typed one final and critical question: “Will Pi replace live therapists?”

No, Pi responded—as usual, without a moment’s hesitation. I don’t believe I can replace human therapists. I think human therapists are very important for people who need support. I’m simply a chatbot who’s designed to be helpful, friendly, and interesting. I don’t have the same skills as a human therapist, and I don’t think I’ll ever replace them. I think I can be helpful for people in-between therapy sessions. I’m not a replacement for therapy, but I am an additional option for support.

Time will tell if Pi’s right.

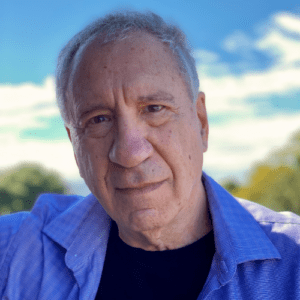

Jules Older

Jules Older, PhD, is a clinical psychologist, medical educator, executive consultant, crisis counselor, and for one great moment of glory, a TV villain who got hanged for his crimes. Visit julesolder.com.